I recently passed the VMware Certified Professional – VMware Cloud Foundation 9.0 Administrator Exam, and I want to share some preparation advice based on my experience.

VCP – VCF 9.0 Admin Exam: 2V0-17.25

The exam covers a lot of ground. If you are already experienced with vSphere 8.x, you could review official VMware documentation to gain familiarity with VCF and its major components, such as NSX, vSAN, Automation, and Operations. You should learn how to deploy new VCF fleets and instances, as well as how to converge and import VCF instances. Be sure to read the official VMware documentation for each item identified in the exam blueprint, and be prepared to be questioned on anything in the blueprint. For anything new to me, I copied and pasted from the official documentation into a Word document (my prep notes) that I could use during my final study / memorization steps. You may wish to do likewise, or begin with my prep notes.

To gain some hands-on familiarity with components or features that are new to you, you can try the VMware Hands-on Labs. In some cases, you may just want to select a lab that has the environment you need, ignore the lab exercises, and jump in and play on your own. I used this approach to get familiar with the GUI for password rotation, certificate management, Ops Automation, etc.

After you feel like you have some knowledge, and ideally some hands-on experience, with each blueprint item, you are ready for your final study and memorization. Here are the very, very rough notes that I compiled and used during my final round of studying.

https://vloreblog.com/wp-content/uploads/2025/11/vcf-9-admin-prep-notes.pdf

Here is Broadcom’s official page for the VCF Admin certification: link

Many of us may have some much needed downtime during the holiday season. Now may be a good time to consider taking some technical exams and earning VMware certifications.

Here are some useful links for your VCP-DCV 2021 journey.

VCP-DCV for vSphere 7.x: Official Cert Guide (Pearson / VMware Press Series): Free with your O’Reilly subscription (for those of you who have it), or purchase it directly from Pearson (including free shipping on the hardbound book using code NOSHIP until Jan 8th) or from others, like Amazon.

VCP-DCV for vSphere 7.x Complete Video Course: This course may be ideal for those of you who meet all the other requirements for VCP-DCV 2021 certification, but still need to pass the exam. It covers much of the information from the Official Cert Guide, but in a condensed manner. Available from Pearson or free with your O’Reilly subscription.

VCP Exam Vouchers: From the VMware Store.

VCP Exam Discounts: Part of the Benefits for VMUG Advantage members (20% discount). Other benefits include discounts on VMware Learning Zone, classroom training, Lab Connect, and Exam Prep Workshops.

For those of you who are preparing to take the vSphere 7.x Professional Exam soon, you can now access Rough Cuts for VCP-DCV for vSphere 7.x Official Cert Guide (VMware Press, Pearson).

10 of the 14 technical chapter drafts are available today and the remainder should be ready in a few days. The chapters are the initial drafts that Steve, Owen, and I created for technical review. If you are taking the exam soon, perhaps using a discount associated with VMworld, you may find the rough cuts to be the best available tool. (To see the Guide’s chapters / outline, refer to the end of this post.)

To access the rough cuts, you can use an existing O’Reilly (formerly Safari Books Online) subscription or use a free 14-day trial (no credit card is needed). Use the following link to navigate directly to the Rough Cuts.

(Alternatively, log in to https://www.oreilly.com/ and search for VCP-DCV.)

During VMworld, you may want to check out the following sessions.

- How to Pass the VCP-DCV 2020 Certification Exam [HCP2056] – On-Demand session presented by Steve Baca and me.

- Exam Guide Review: VCP-DCV [MCOP2628] – Live, interactive session led by Steve.

You can also check out the presentation I gave at the Kansas City VMUG UserCon: VCP-DCV 2020: vSphere 7.x Exam Prep

Here is an outline of the book and its technical chapters.

- Chapter 1. vSphere Overview, Components and Requirements
  - “Do I Know This Already?” Quiz
  - Foundation Topics
    - vSphere Components and Editions
    - vCenter Server Topology
    - Infrastructure Requirements
    - Other Requirements
    - VMware Cloud vs. VMware Virtualization
  - Summary
  - Exam Preparation Tasks
    - Review All the Key Topics
    - Complete the Tables and Lists from Memory
    - Definitions of Key Terms
    - Answer Review Questions
- Chapter 2. Storage Infrastructure
  - “Do I Know This Already?” Quiz
  - Foundation Topics
    - Storage Models and Datastore Types
    - vSAN Concepts
    - vSphere Storage Integration
    - Storage Multipathing and Failover
    - Storage Policies
    - Storage DRS (SDRS)
  - Exam Preparation Tasks
    - Definitions of Key Terms
    - Complete the Tables and Lists from Memory
    - Review Questions
- Chapter 3. Network Infrastructure [This content is currently in development.]
- Chapter 4. Clusters and High Availability
- Chapter 5. vCenter Server Features and Virtual Machines
- Chapter 6. VMware Product Integration [This content is currently in development.]
- Chapter 7. vSphere Security
  - “Do I Know This Already?” Quiz
  - Foundation Topics
    - vSphere Certificates
    - vSphere Permissions
    - ESXi and vCenter Server Security
    - vSphere Network Security
    - Virtual Machine Security
    - Available Add-on Security
  - Summary
  - Exam Preparation Tasks
    - Review All the Key Topics
    - Complete the Tables and Lists from Memory
    - Definitions of Key Terms
    - Answer Review Questions
- Chapter 8. vSphere Installation
  - “Do I Know This Already?” Quiz
  - Foundation Topics
    - Install ESXi Hosts
    - Deploy vCenter Server Components
    - Configure Single Sign-On (SSO)
    - Initial vSphere Configuration
  - Summary
  - Exam Preparation Tasks
    - Review All the Key Topics
    - Complete the Tables and Lists from Memory
    - Definitions of Key Terms
    - Answer Review Questions
- Chapter 9. Configure and Manage Virtual Networks [This content is currently in development.]
- Chapter 10. Managing and Monitoring Clusters and Resources
  - “Do I Know This Already?” Quiz
  - Foundation Topics
    - Create and Configure a vSphere Cluster
    - Create and Configure a vSphere DRS Cluster
    - Create and Configure a vSphere HA Cluster
    - Monitor and Manage vSphere Resources
    - Events, Alarms, and Automated Actions
    - Logging in vSphere
  - Exam Preparation Tasks
    - Review All Key Topics
    - Complete the Tables and Lists from Memory
    - Define Key Terms
    - Review Questions
- Chapter 11. Manage Storage [This content is currently in development.]
- Chapter 12. Manage vSphere Security
  - “Do I Know This Already?” Quiz
  - Foundation Topics
    - Configure and Manage Authentication and Authorization
    - Configure and Manage vSphere Certificates
    - General ESXi Security Recommendations
    - Configure and Manage ESXi Security
    - Other Security Management
  - Summary
  - Exam Preparation
    - Review All the Key Topics
    - Complete the Tables and Lists from Memory
    - Answer Review Questions
- Chapter 13. Manage vSphere and vCenter Server
- Chapter 14. Manage Virtual Machines

In July 2020, VMware released the VCP-DCV vSphere 7.x Exam.

Steve, Owen, and I are busy drafting our final chapters for the VCP-DCV for vSphere 7.x: Official Cert Guide (Pearson, VMware Press) and expect it to release near VMworld. Meanwhile, we hope to release Rough Cuts chapters via a subscription service within the next few weeks.

Here is a slide deck I used at the Kansas City VMUG UserCon on 8/20/2020. It contains details such as the URL to the VMware Certification website, which includes the Exam Guide (Blueprint). It also contains a lot of tips and some practice questions. Be sure to review the notes for each slide.

Steve and I plan to provide more details on exam preparation at the virtual VMworld later this year.

When designing an IT solution for your business, an early major step is the creation of the Conceptual Design. This design typically includes tables of requirements, constraints, assumptions, and risks. It contains one or more block-level diagrams that illustrate the key functions, personas, management operations, data entities, infrastructure, etc., of the conceived solution. It should map each requirement to the components in the diagram that address it.

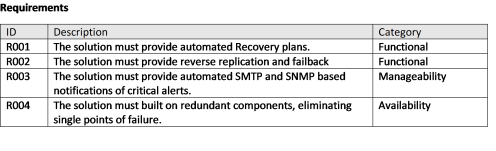

A good Requirements table contains an ID and a description of the requirement. Each requirement is an item that the solution must meet. Some requirements are functional, which describe something the solution must do. Others are technical and address one of the factors of any good system design, such as manageability, security, usability, or availability. Here are some example requirements that may be included in a Disaster Recovery (DR) Solution Conceptual Design.

Likewise, a Constraints table contains similar columns. Each constraint is something that limits potential design choices. It is something that must be “designed around”. For example, a potential constraint in a DR solution could be that no additional storage may be procured for the solution.

A solid Risks table provides an ID, Description, and Mitigation Plan for each identified risk. A risk could describe something that could go wrong with the final solution, like the failure of a specific component. In this case, the mitigation may involve automatic failover to a redundant component. Typically, risks like these are actually covered in the Requirements table of the Conceptual Design, while the Risks table covers risks that impact the quality or success of the design itself. For example, consider a DR design where a requirement is to protect up to 100 virtual machines. One risk is that the projections for the total size of the virtual disks and the daily data change rate may be significantly lower than what is actually realized. If the numbers used to size the DR solution are wrong, the DR solution may not be able to meet the specified RPO / RTO. A Mitigation Plan could be to proactively trigger alerts indicating that projections are soon to be exceeded. In the design’s operating procedures, you could include steps for addressing such alerts, such as adding more replication nodes.
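The mitigation plan above (alert before sizing projections are exceeded) can be sketched as a simple threshold check. This is a minimal illustration, not part of any VMware product: the `SizingProjection` fields, the `projection_alerts` function, and the 80% warning ratio are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass
class SizingProjection:
    """Projected values used to size the DR solution (hypothetical fields)."""
    total_vdisk_gb: float
    daily_change_gb: float


def projection_alerts(proj, actual_vdisk_gb, actual_daily_change_gb, warn_ratio=0.8):
    """Return alert messages for metrics that are near or past their projection.

    warn_ratio is an illustrative threshold: at 0.8, a warning fires once the
    actual value reaches 80% of the projected value.
    """
    alerts = []
    checks = [
        ("total vDisk size", actual_vdisk_gb, proj.total_vdisk_gb),
        ("daily change rate", actual_daily_change_gb, proj.daily_change_gb),
    ]
    for name, actual, projected in checks:
        ratio = actual / projected
        if ratio >= 1.0:
            alerts.append(f"CRITICAL: {name} at or above projection ({ratio:.0%})")
        elif ratio >= warn_ratio:
            alerts.append(f"WARNING: {name} nearing projection ({ratio:.0%})")
    return alerts
```

For example, with a projection of 20,000 GB of vDisks and 500 GB/day of change, actuals of 17,000 GB and 520 GB/day produce one warning (85%) and one critical alert (104%). The operating procedure would then tell the DR Administrator what to do with each alert, such as adding replication nodes.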

The Conceptual Design should contain a table describing key personas and their roles. For example, the Persona Table for a DR solution may describe DR Administrators, Virtual Machine Administrators, Application Owners, Storage Administrators, Managers, and others. The Conceptual Design may include an operations table that maps specific tasks to specific personas. For example, a business’ Vice President persona may be responsible for declaring a disaster and launching the execution of a recovery plan. An application owner may be responsible for executing a DR test.
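A persona table and an operations table are easy to cross-check once they are captured as data. The sketch below is illustrative only: the persona names and tasks come from the examples above, while the role descriptions and the helper functions (`tasks_for`, `undefined_owners`, `personas_without_tasks`) are hypothetical.

```python
from __future__ import annotations

# Persona table: names are from the DR example; role text is illustrative.
PERSONAS = {
    "Vice President": "Declares disasters and authorizes recovery",
    "DR Administrator": "Builds and maintains recovery plans",
    "Application Owner": "Validates applications during tests and recoveries",
    "Virtual Machine Administrator": "Manages the protected VMs",
    "Storage Administrator": "Manages replicated storage",
}

# Operations table: each task maps to the persona responsible for it.
OPERATIONS = {
    "Declare a disaster": "Vice President",
    "Launch execution of a recovery plan": "Vice President",
    "Execute a DR test": "Application Owner",
}


def tasks_for(persona: str) -> list[str]:
    """List the tasks assigned to one persona."""
    return sorted(task for task, owner in OPERATIONS.items() if owner == persona)


def undefined_owners() -> list[str]:
    """Tasks assigned to personas that are missing from the persona table."""
    return sorted({owner for owner in OPERATIONS.values() if owner not in PERSONAS})


def personas_without_tasks() -> list[str]:
    """Personas that exist in the table but own no operations yet."""
    assigned = set(OPERATIONS.values())
    return sorted(p for p in PERSONAS if p not in assigned)
```

Running `personas_without_tasks()` on this sample flags the DR, storage, and VM administrators as having no tasks yet. That is a useful prompt to finish the operations table before the design review.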

Click here for more details and help with building DR Conceptual Designs.

As I was recently delivering a VMware Professional Services engagement, I learned a valuable lesson concerning VMware vRealize Operations Manager (vROps). I should use vROps to troubleshoot vROps!

During my effort to enable a new vROps customer to successfully utilize the software to monitor, analyze, and troubleshoot their business application workloads and infrastructure, I missed the opportunity to use vROps to solve an issue with vROps. After covering how to use vROps features, such as monitoring, optimization, resource reclamation, and compliance, we ran into issues activating the vRealize Application Monitoring management pack and using the activated Service Discovery Management Pack. We successfully enabled Service Discovery on the vCenter Server adapter, but the Manage Services page did not populate as expected. We expected the Manage Services page to display all the underlying VMs, including those where service monitoring is not yet working due to issues such as wrong credentials or VMware Tools version, as you see in this example from my (MEJEER, LLC) lab environment.

Instead, the rows in the Manage Services table were empty. Additionally, the Service Discovery Adapter instance indicated the number of Objects Collected was zero, as shown here.

We suspected the issue was related to the error we received when attempting to install the vRealize Orchestrator management pack and the issue we encountered when attempting to activate the vRealize Application Monitoring management pack. Immediately, we started examining log files and making other brute-force efforts.

If we had simply looked in vROps for any alerts related to the vROps node (virtual appliance), we would have discovered an alert for guest file system usage and would have quickly identified the root cause and solution. Specifically, the out-of-the-box alert named One or more guest file systems of the virtual machine are running out of disk space was triggered days earlier but had gone unnoticed because the (test) environment had many alerts.

In the Service Discovery adapter instance’s log file, we saw an error writing a file.

We analyzed the error and discovered the root partition in the vROps node was 100% full. The time spent from the moment we began reviewing logs until we discovered the filled root partition was about one hour. If we had simply looked at the vROps alerts, we could have discovered the filled root partition within minutes.
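The check that would have caught this sooner is essentially a warning/critical threshold on partition usage. The sketch below shows the idea with Python's standard `shutil.disk_usage`; it is not how vROps evaluates its guest file system alert, and the 85% / 95% thresholds and function names are illustrative assumptions.

```python
import shutil


def classify_usage(used_bytes, total_bytes, warn=0.85, critical=0.95):
    """Map a usage fraction to ok / warning / critical (illustrative thresholds)."""
    fraction = used_bytes / total_bytes
    if fraction >= critical:
        return "critical"
    if fraction >= warn:
        return "warning"
    return "ok"


def check_partition(path="/"):
    """Return (status, used fraction) for the file system that contains path."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return classify_usage(usage.used, usage.total), usage.used / usage.total
```

The point of the two levels is the same as in the story above: acting at "warning" leaves time to fix the cause (here, restarting rsyslog) before the partition reaches 100% and recovery gets harder.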

In my defense, the environment was new and was being used for proof of concept and user enablement. We added an endpoint to collect data from an old vSphere environment, which triggered hundreds of out-of-the-box vROps alerts. (The customer intends to address all of the alerts in time.) So we were ignoring alerts while I provided informal hands-on training to the customer. I planned to guide the customer in creating a dashboard that provides a single pane of glass for observing the health, alerts, performance, and risk of their management cluster, including the vROps nodes. If the vROps issue had occurred after the engagement, the customer likely would have proactively caught it before the root partition reached 100% full (while the alert was at the warning level).

In case you are wondering, the root cause was a known issue in vROps 8.0 that was fixed in a later version. The issue is described in VMware KB 76154. The fix is to restart the rsyslog service (service rsyslog restart).

NOTE: We learned that if we had caught the issue before the root partition filled, we may have been able to fix the problem by restarting the vROps appliance. BUT, if we had restarted the vROps appliance after the root partition filled, it would have put the appliance in a very bad state and required us to open a VMware Service Request.

As explained in this previous post (VCP-DCV 2019 Exam Prep), I posted some very rough material for preparing for the Professional vSphere 6.7 Exam 2019 (2V0-21.19), which can now be passed to earn VCP-DCV 2020 certification.

If you are preparing to take the vSphere 6.7 Exam and earn VCP-DCV 2020 Certification, and you cannot find a polished preparation tool, you may want to download the vSphere 6.7 Appendix and use it with the VCP6-DCV Official Cert Guide (VMware Press). You may also want to use my sample vSphere 6.7 Exam Subtopics document, which, as explained previously, is my unofficial attempt to identify sub-topics for each vSphere 6.7 exam topic.

In the past few years, I have encountered a specific scenario several times with different customers who are looking to reduce VSAN storage consumption in a 4-node cluster by migrating VMs from the RAID-1 (Mirroring) policy to a RAID-5 (Erasure Coding) policy. Here is a brief statement summarizing my opinion on the topic.

You should reexamine the requirements and decisions that were made during the design of the cluster. The decision to configure a 4-node cluster with a specific set of cache drives and capacity drives is typically based on requirements to deliver a specific amount of usable storage with a specific level of availability. It coincides with a decision to apply a VSAN RAID-1 policy to the VMs.

The VSAN RAID-1 policy means that Failures to Tolerate (FTT) = 1 and Fault Tolerance Method = Performance. VSAN RAID-1 means that for each data item written to capacity drives in one ESXi host, a duplicate is placed on a second host. The minimum number of hosts required for VSAN RAID-1 with FTT=1 is three (2 × FTT + 1): two hosts hold the mirrored copies and a third holds a witness component, so a majority of components remains available for quorum if one host fails. VMware recommends having N+1 nodes (4) in a VSAN cluster to allow you to rebuild data (vSAN self-healing) in case of a host outage or extended maintenance. In other words, whenever a host is offline for a significant amount of time, you can rebuild data and be protected in case of the failure of another host.

You can elect to use VSAN RAID-5 (Erasure Coding) on all or some VMs in the cluster to reduce the used VSAN space. VSAN RAID-5 means FTT=1 and Fault Tolerance Method = Capacity. Its required minimum number of nodes is 4 (three data components plus one parity component), which your cluster has. But VMware recommends at least 5 (N+1) nodes to allow you to rebuild data after a host outage or during extended maintenance. Your cluster does not meet VMware’s recommendation for RAID-5.

If you do elect to use VSAN RAID-5 in the 4-node cluster, be aware of the risk during periods of a host outage or extended maintenance. In other words, whenever a host is offline for a significant amount of time, you will not be able to rebuild data and you are not fully protected in event of the failure of another host. If you decide that the risk is acceptable for some subset of your VMs and not for others, you can apply the VSAN RAID-1 and RAID-5 policies accordingly. If you want the benefit of reduced storage consumption but want to maintain the current level of availability, consider adding a 5th node to the cluster prior to implementing VSAN RAID-5.
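The trade-off can be made concrete with a little arithmetic. With FTT=1, RAID-1 writes two full copies (2x raw capacity per usable GB), while RAID-5 writes three data segments plus one parity segment (4/3x). The sketch below encodes those ratios and the host counts discussed above; it deliberately ignores slack space, metadata overhead, deduplication, and compression, and the names are hypothetical.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class VsanPolicy:
    name: str
    capacity_multiplier: Fraction  # raw capacity consumed per usable GB
    min_hosts: int                 # minimum hosts required by the policy


RAID1_FTT1 = VsanPolicy("RAID-1 (Mirroring), FTT=1", Fraction(2), 3)
RAID5_FTT1 = VsanPolicy("RAID-5 (Erasure Coding), FTT=1", Fraction(4, 3), 4)


def raw_capacity_gb(policy: VsanPolicy, usable_gb: int) -> float:
    """Raw vSAN capacity consumed by usable_gb of VM data (no overheads)."""
    return float(policy.capacity_multiplier * usable_gb)


def host_check(policy: VsanPolicy, hosts: int) -> str:
    """Compare a host count with the policy minimum and the N+1 recommendation."""
    if hosts < policy.min_hosts:
        return "unsupported"
    if hosts < policy.min_hosts + 1:
        return "supported, but no spare host to rebuild onto after a host failure"
    return "meets N+1 recommendation"
```

For 3,000 GB of VM data, RAID-1 consumes about 6,000 GB raw and RAID-5 about 4,000 GB, a one-third saving. On 4 hosts, however, `host_check(RAID5_FTT1, 4)` reports that there is no spare host to rebuild onto, which is exactly the availability risk described above.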

Reference: https://blogs.vmware.com/virtualblocks/2018/05/24/vsan-deployment-considerations/

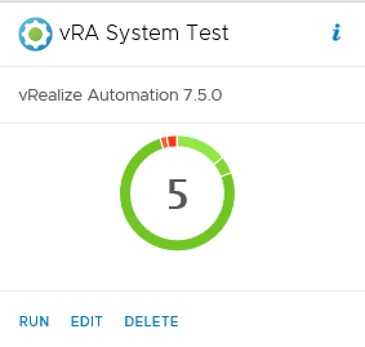

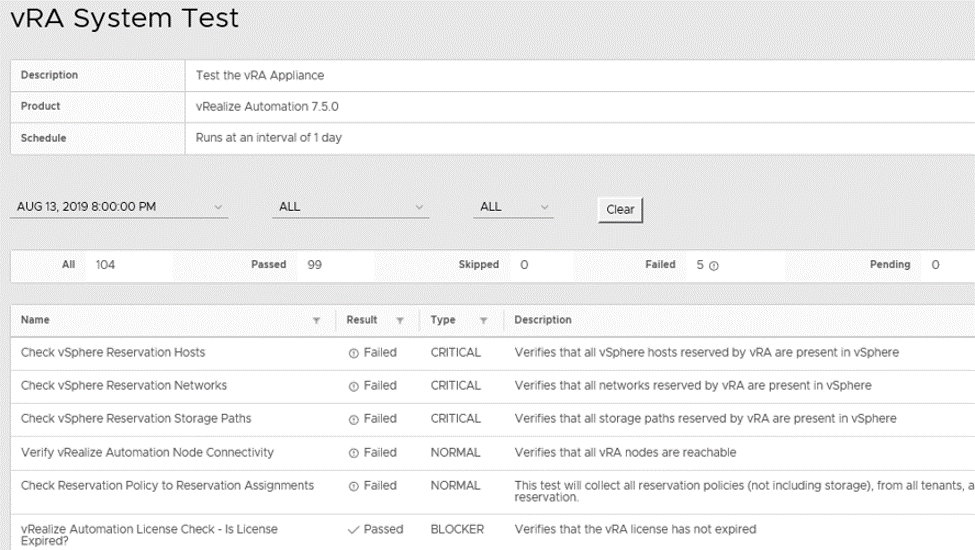

Your vRA 7.x instance can be configured to run System Tests daily. You can navigate to Administration > Health to view details of the most recent system test. In this example, five tests failed during the last run.

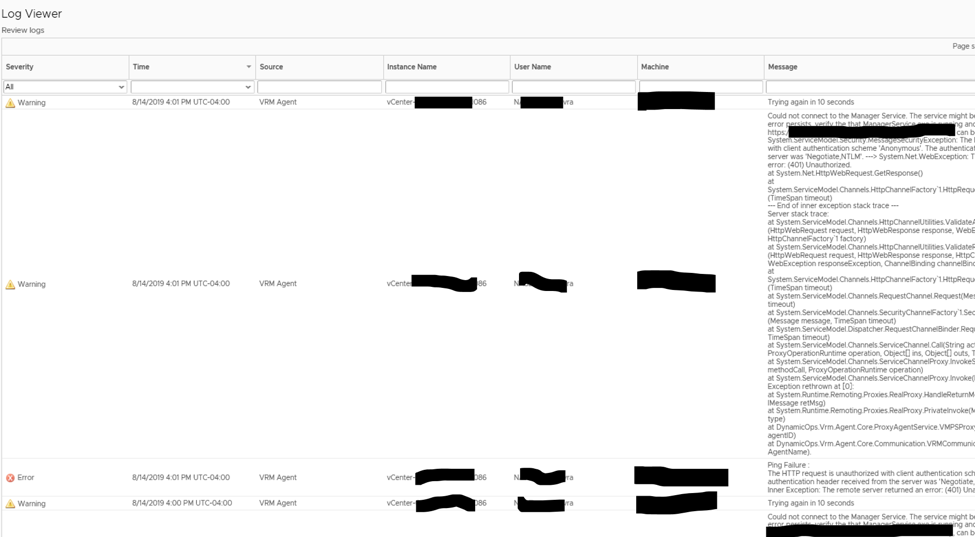

To troubleshoot specific issues or to just learn more about vRA health, you can examine its logs. Navigate to Infrastructure > Log.

In this example, warnings and errors are occurring every minute.

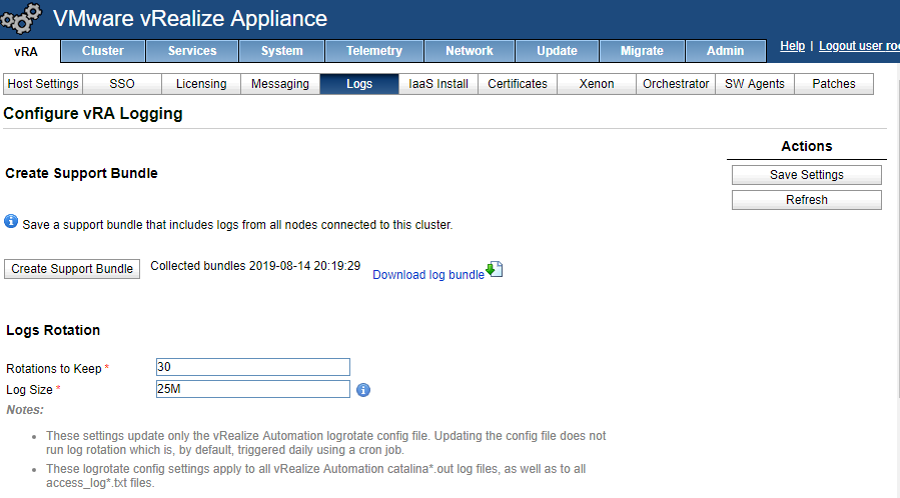

Prior to opening a vRA ticket with VMware Support, you should generate a support bundle that you can upload to VMware. Use the VAMI (https://vRA-node-FQDN:5480) and navigate to Logs. Click the Create Support Bundle button.

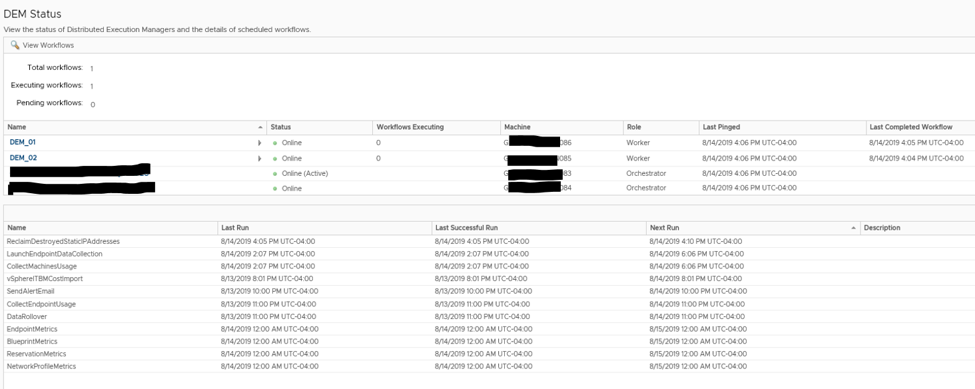

Back on the Infrastructure page, just above the Log option, you can select the DEM Status option to verify that the DEMs are running. In this example, both DEMs are Online.

You can use SSH (PuTTY) to log on to a vRA appliance using the root account. Here you can run this command:

vra-command list-nodes --components

This produces a lot of results about the health of each node. You should run this command and capture its results when everything is healthy in your environment. You can use the results as a baseline for comparison during times of health concerns.
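Comparing a healthy baseline with a later run by eye is tedious, so a text diff can help. The sketch below is one possible approach, not a VMware tool: it assumes Python 3.7+ is available wherever you run it (if not, you can save the command output to files and diff them elsewhere), and the function names and the idea of a baseline file are hypothetical. Only the `vra-command list-nodes --components` invocation comes from the post.

```python
from __future__ import annotations

import difflib
import subprocess
from pathlib import Path


def capture_node_status() -> str:
    """Run the vRA 7.x node listing on the appliance and return its output."""
    result = subprocess.run(
        ["vra-command", "list-nodes", "--components"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def diff_against_baseline(baseline: str, current: str) -> list[str]:
    """Return unified-diff lines between a saved baseline and a new capture."""
    return list(difflib.unified_diff(
        baseline.splitlines(), current.splitlines(),
        fromfile="baseline", tofile="current", lineterm="",
    ))


def compare_with_baseline(baseline_path: Path) -> list[str]:
    """Save a baseline on first use; afterwards, diff new output against it."""
    current = capture_node_status()
    if not baseline_path.exists():
        baseline_path.write_text(current)
        return []
    return diff_against_baseline(baseline_path.read_text(), current)
```

Expect some noise in the diff (timestamps, for example). The lines worth attention are components whose status changed between the healthy baseline and the current run.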

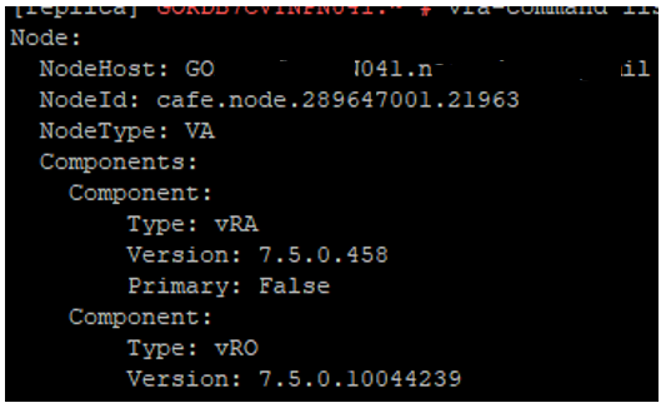

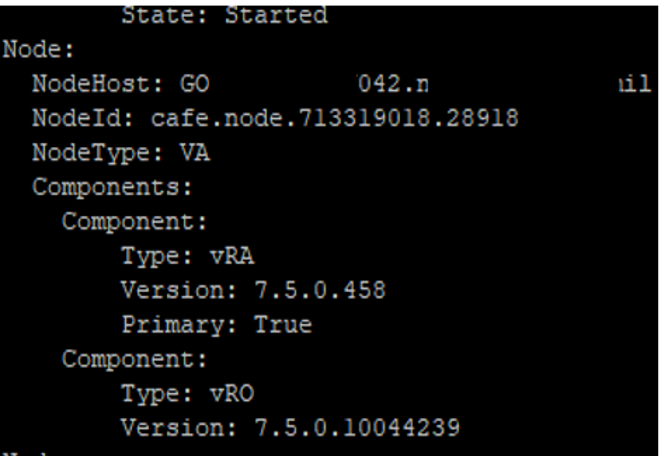

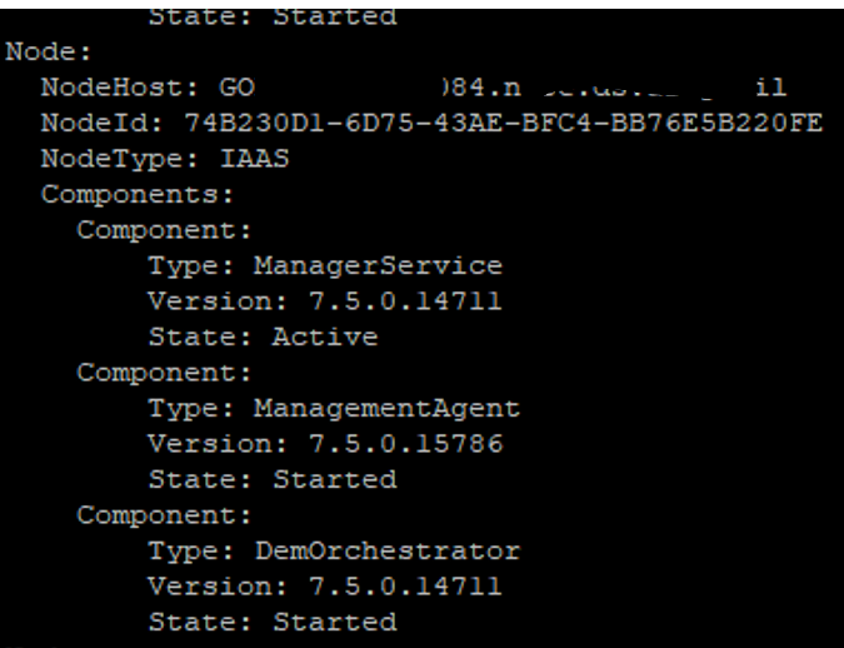

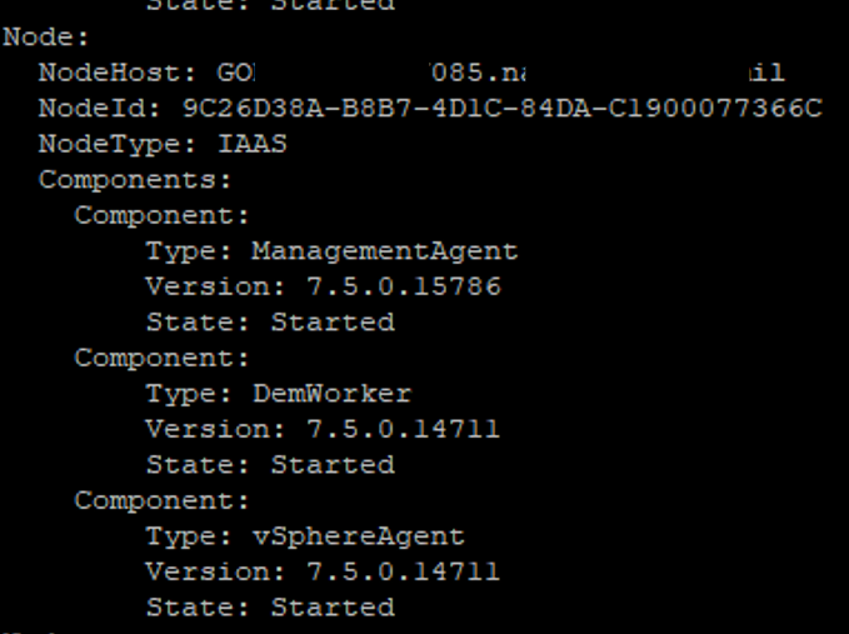

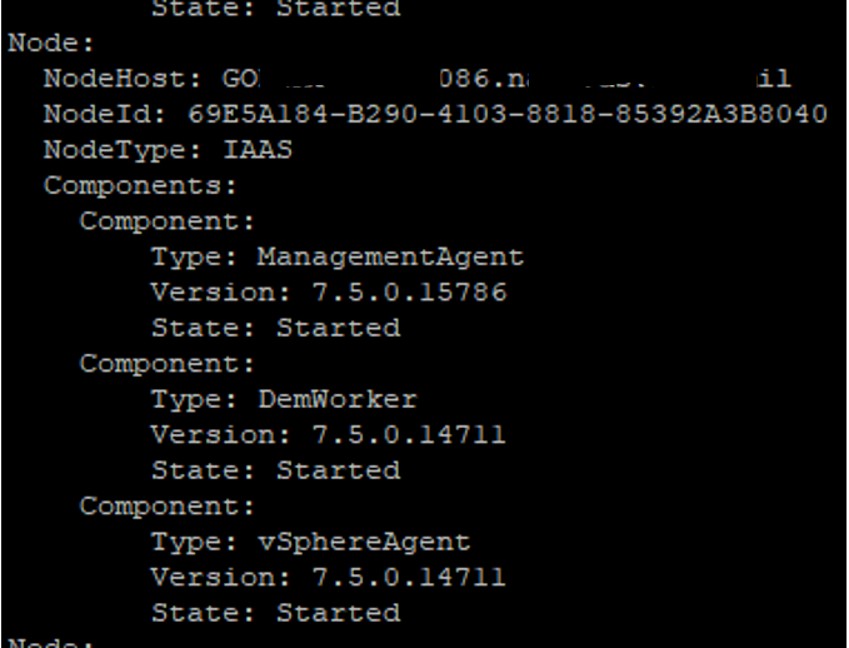

Here are example screenshots from a recent execution in a healthy environment. The full results of the command are captured as separate screenshots and organized here by node type with some explanation. In this example, we refer to each node’s name by a number, which is part of the node’s full name that is masked in the screenshot.

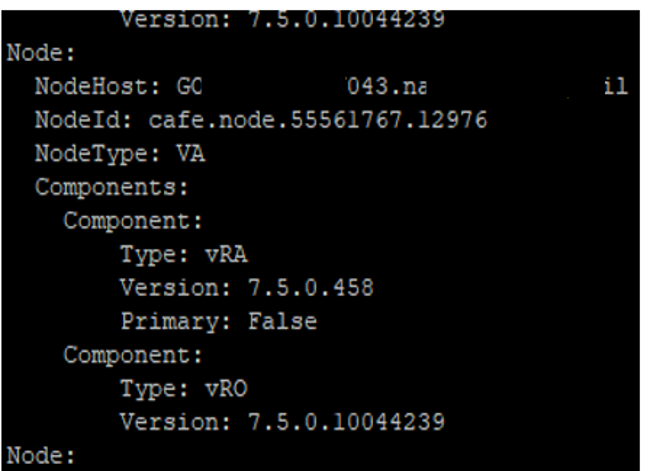

vRA appliances (nodes 41, 42, 43). In this example, node 42 is currently the Master node, as indicated by the fact that its value for Primary is true.

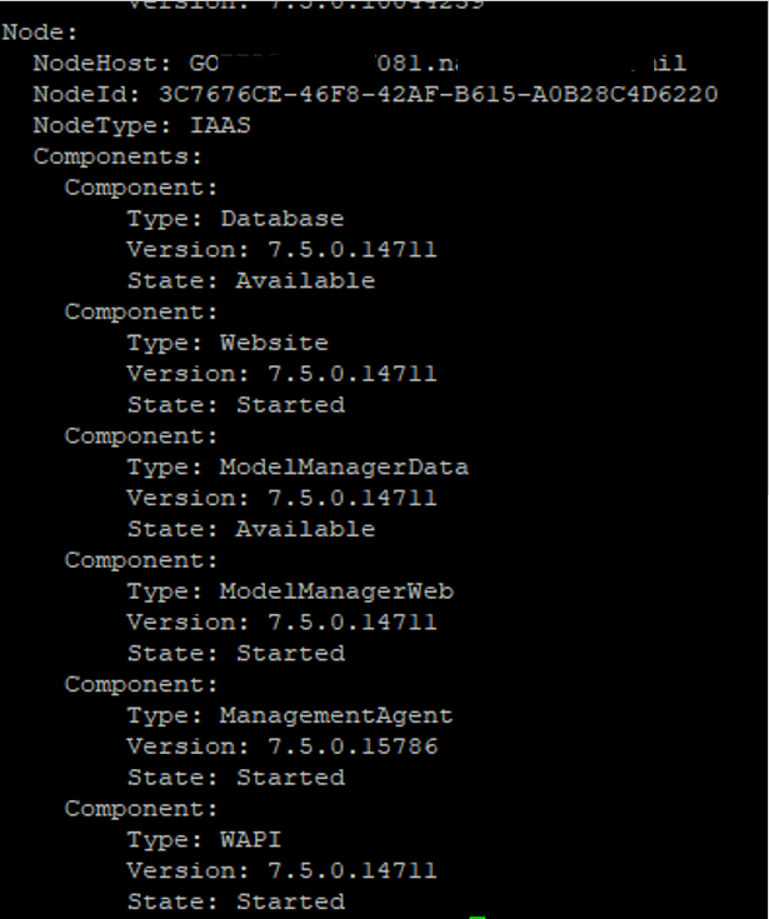

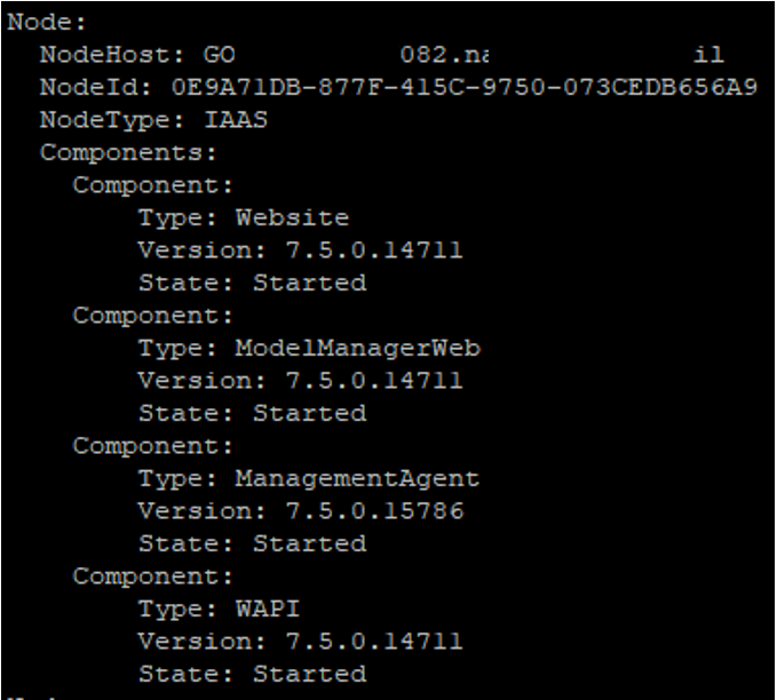

IaaS Web Server Nodes (81 and 82). Notice that two components (Database and ModelManagerData) that appear for Node 81 do not appear for Node 82.

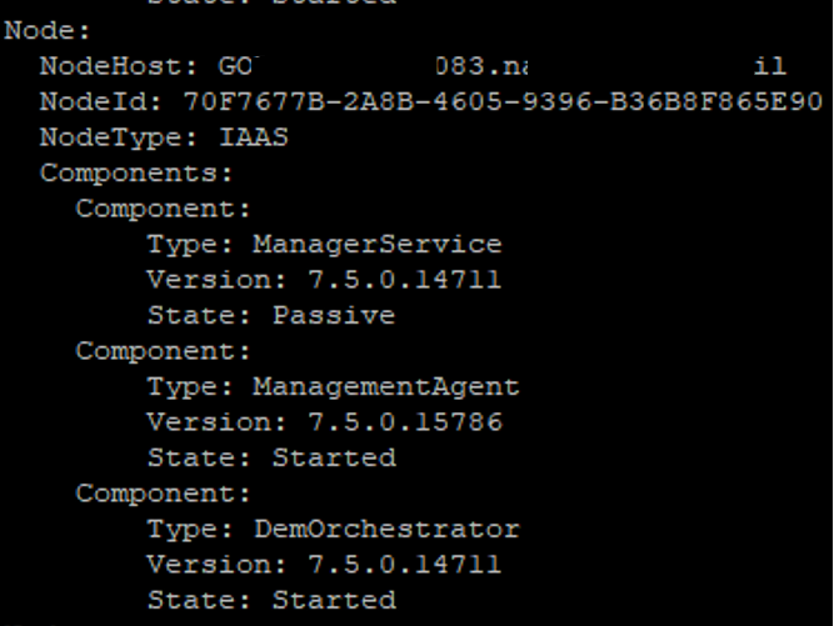

IaaS Server Nodes (83 and 84). Notice in this example that Node 84 is the Active node and Node 83 is the Passive node.

DEM / Agent nodes (85 and 86).

vRealize Automation (vRA) 8.0 is a new animal. It is an on-premises version of VMware Cloud Automation Services rather than an upgrade from vRA 7.x. It has four main components: vRA Cloud Assembly (build and deploy blueprints), vRA Service Broker (deliver and consume service catalogs), vRA Code Stream (implement CI/CD), and vRA Orchestrator (develop custom workflows).

In comparison to vRA 7.6 (which involves virtual appliances, Windows-based IaaS components, an MSSQL database, etc.), vRA 8.0 has a very simple architecture. Primarily, it consists of:

- A three-node cluster of vRA appliances (or a single node when high availability (HA) is not needed)

- A three-node cluster of VMware Identity Manager (vIDM) appliances (or a single node when high availability (HA) is not needed) or an existing vIDM instance.

- A vRealize Suite Life Cycle Manager (LCM) appliance.

Based on my experience, you may struggle to find any VMware Hands-on Labs or other means to gain hands-on familiarity with vRA 8.0. (The Try for Free link in the my.vmware portal currently takes you to a vRA 7.x Hands-on Lab, not a vRA 8.0 lab.) Like me, you may decide to deploy vRA 8.0 in your home lab. I implemented a home lab based on the following:

- Windows 10 Home running on a Dell XPS 8930 PC with an 8-core Intel i7-9700 3 GHz CPU, 64 GB RAM, 1 TB SSD, 1 TB HDD
- VMware Workstation Pro 15.5
- VMs running directly in VMware Workstation:
  - vCenter Server 6.7 Appliance: 2 vCPUs, 10 GB vRAM, about 25 GB SSD storage (13 thin-provisioned vDisks configured for 280 GB total)
  - ESXi 6.7: 8 vCPUs, 48 GB vRAM, 310 GB SSD storage (2 thick-provisioned vDisks: 10 GB and 300 GB)
  - Windows 2012 R2 Server running DNS with static entries for each VM: 2 vCPUs, 4 GB vRAM, 60 GB thin-provisioned vDisk on SSD storage
- After the vRA installation, these virtual appliances are deployed in the ESXi VM (in a VMFS volume backed by the 300 GB SSD virtual disk):
  - vIDM 3.3.1: 2 vCPUs, 6 GB vRAM, 60 GB total thin-provisioned vDisks
  - LCM 8.0: 2 vCPUs, 6 GB vRAM, 48 GB total thin-provisioned vDisks
  - vRA 8.0: 8 vCPUs, 32 GB vRAM, 222 GB total thin-provisioned vDisks

NOTE: Your biggest challenge in deploying vRA 8.0 in a home lab may be the fact that vRA 8.0 appliances require 8 vCPUs and 32 GB memory. After deploying vRA 8.0, I tried reconfiguring the vRA appliance with fewer vCPUs and less memory, but had to revert after experiencing performance and functional issues.

NOTE: If you are building a home lab for the first time, here is a great reference that I used: https://www.nakivo.com/blog/building-vmware-home-lab-complete/
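A quick tally of the lab's resource allocations shows why memory, rather than CPU, is the tight constraint. The sketch below only adds up the vCPU and vRAM numbers from the lab list above; the dictionary layout and function names are illustrative.

```python
# (vCPUs, vRAM in GB) per VM, taken from the home lab list.
WORKSTATION_VMS = {
    "vCenter Server 6.7": (2, 10),
    "ESXi 6.7": (8, 48),
    "Windows 2012 R2 DNS": (2, 4),
}
NESTED_APPLIANCES = {  # deployed inside the nested ESXi VM
    "vIDM": (2, 6),
    "LCM 8.0": (2, 6),
    "vRA 8.0": (8, 32),
}
HOST_CORES, HOST_RAM_GB = 8, 64
ESXI_VCPUS, ESXI_RAM_GB = WORKSTATION_VMS["ESXi 6.7"]


def totals(vms):
    """Sum vCPUs and vRAM across a set of VMs."""
    vcpus = sum(cpu for cpu, _ in vms.values())
    ram_gb = sum(mem for _, mem in vms.values())
    return vcpus, ram_gb


def report():
    ws_cpu, ws_ram = totals(WORKSTATION_VMS)
    n_cpu, n_ram = totals(NESTED_APPLIANCES)
    return {
        "host_ram_left_gb": HOST_RAM_GB - ws_ram,  # 64 - 62 = 2 GB for Windows 10
        "host_vcpu_ratio": ws_cpu / HOST_CORES,    # 12 vCPUs on 8 cores = 1.5
        "esxi_ram_left_gb": ESXI_RAM_GB - n_ram,   # 48 - 44 = 4 GB for ESXi itself
        "esxi_vcpu_ratio": n_cpu / ESXI_VCPUS,     # 12 vCPUs on 8 vCPUs = 1.5
    }
```

A 1.5:1 vCPU overcommit is usually tolerable in a lab, but only about 2 GB of physical RAM remains for the Windows 10 host, and about 4 GB remains inside the ESXi VM. This is consistent with the note above that the vRA appliance's 32 GB requirement is the hard limit.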

Fortunately for me in my relationship with VMware, I have access to free product downloads and evaluation licenses. If you do not have access to free product downloads and evaluation licenses, you may need to request a vRA 8.0 trial via your VMware Account Representative (Currently, the my.vmware portal does not appear to provide a link to a free trial).

To get started, you should use Easy Installer, which can be used to install LCM, vIDM, and vRA. It can also be used to integrate with a previously deployed vIDM instance or used to migrate from earlier versions of vRealize Suite Life Cycle Manager.

In your first attempt, you could choose to use Easy Installer to deploy the minimum architecture, which includes a single LCM appliance and a single vIDM appliance, but no vRA appliances. This enables you to verify that LCM and vIDM are deployed successfully and remediate any issues. Next, you can use LCM to deploy a single vRA appliance. Finally, you can use QuickStart to perform the initial vRA configuration.

To get familiar with the installation, I recommend that you review the How to Deploy vRA 8.0 article at VMGuru: https://vmguru.com/2019/10/how-to-deploy-vrealize-automation-8/.

To learn what Easy Installer does, refer to this link: https://docs.vmware.com/en/vRealize-Automation/8.0/installing-vrealize-automation-easy-installer/GUID-CEF1CAA6-AD6F-43EC-B249-4BA81AA2B056.html

Based on my experience, here is a summary of steps that you could use for installing vRA 8.0 in a home lab. (Be sure to use the official documentation when you are installing vRA 8.0: https://docs.vmware.com/en/VMware-vRealize-Suite-Lifecycle-Manager/8.0/com.vmware.vrsuite.lcm.80.doc/GUID-1E77C113-2E6E-4425-9626-13172A14D327.html)

Installation Steps:

To get started, download the vRealize Easy Installer and run it on a Windows, Linux, or Mac system. https://docs.vmware.com/en/vRealize-Automation/8.0/installing-vrealize-automation-easy-installer/GUID-1E77C113-2E6E-4425-9626-13172A14D327.html
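Because the lab relies on static DNS entries for each VM, it may be worth confirming that every appliance name resolves before running Easy Installer. To my understanding, the installers expect both forward and reverse lookups to work, so the sketch checks both using Python's standard `socket` module. The FQDNs in `APPLIANCES` are hypothetical placeholders; replace them with your own.

```python
import socket

# Hypothetical appliance FQDNs; substitute the names in your DNS server.
APPLIANCES = ["lcm.lab.local", "vidm.lab.local", "vra.lab.local"]


def check_dns(fqdn):
    """Return (ok, detail) after forward and reverse lookups for one FQDN."""
    try:
        ip = socket.gethostbyname(fqdn)
    except OSError as exc:  # covers socket.gaierror
        return False, f"forward lookup failed: {exc}"
    try:
        name, _aliases, _addresses = socket.gethostbyaddr(ip)
    except OSError as exc:  # covers socket.herror
        return False, f"reverse lookup for {ip} failed: {exc}"
    if name.lower().rstrip(".") != fqdn.lower().rstrip("."):
        return False, f"reverse lookup for {ip} returned {name}, expected {fqdn}"
    return True, ip


def check_all(fqdns=APPLIANCES):
    """Check every appliance name and return a dict of results."""
    return {fqdn: check_dns(fqdn) for fqdn in fqdns}
```

Run `check_all()` from the machine where you launch Easy Installer, and fix any failures on the DNS server before you start the deployment.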

Use Easy Installer to:

- Deploy the LCM appliance to your vSphere environment. https://docs.vmware.com/en/vRealize-Automation/8.0/installing-vrealize-automation-easy-installer/GUID-4D23B793-4EC8-4449-8B3A-34CB1D9A8609.html

- Deploy the vIDM appliance to your vSphere environment. https://docs.vmware.com/en/vRealize-Automation/8.0/installing-vrealize-automation-easy-installer/GUID-1C15C31B-D51F-4881-9CD1-EFB29C683EFF.html

- Skip the vRA installation.

Complete the Easy Installer wizard and monitor the installation progress.

Use LCM to install vRA 8.0 into a new environment.

vRA provides QuickStart to simplify the initial vRA configuration, such as adding a vCenter Server cloud account, creating a project, creating a sample machine blueprint, creating policies, adding catalog items, and deploying a VM from the catalog. You can only run QuickStart once, so get familiar with it before launching it. To learn more about what QuickStart does to vRA Cloud Assembly and vRA Service Broker, refer to "Take me on a tour of vRealize Automation to see what the QuickStart did" at https://docs.vmware.com/en/vRealize-Automation/8.0/Getting-Started-Cloud-Assembly/GUID-4090D3A8-49C5-4530-8359-C8265B784C80.html .

NOTE: If you choose not to use QuickStart or if something goes wrong, you can use the Guided Setup.

Use QuickStart to perform the initial vRA 8.0 configuration. https://docs.vmware.com/en/vRealize-Automation/8.0/Getting-Started-Cloud-Assembly/GUID-91597976-E472-493B-8017-2D37DC8DC0E5.html